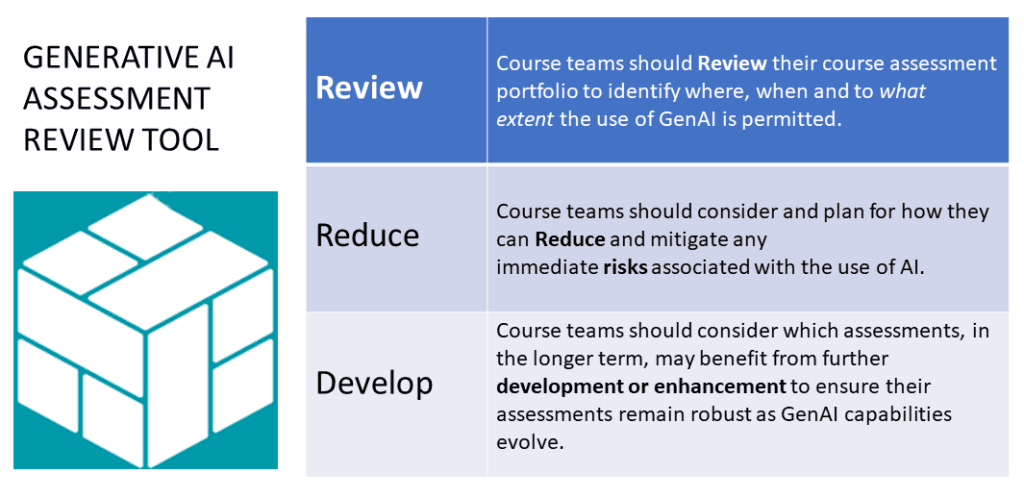

Our generative AI assessment review model

We propose a simple review model for course teams and departments to review their coursework in the light of GenAI and to help them make plans for ongoing development. This has been shaped by the work of both academic and professional services colleagues at Bath, who are already engaging with and adapting their approach to assessment design and development. The review tool also builds on the guidance developed by Lodge et al. at the University of Queensland and by UCL.

The three steps below are designed to support course teams to review, adapt and set assessments and to ensure that, for each piece of assessment, staff and students have a shared understanding of how much, and where in the assessment process, GenAI tools can be used. Once Step 1 is complete, we recommend that staff inform students as soon as possible which category their assessments fall into and reinforce any expectations around the use of GenAI. A key message is that, whatever approach is adopted, the work must in all cases remain that of the student: they are in the driving seat and retain responsibility for their work.

Step 1: Review assessments

Course teams should review their course assessment portfolio to identify where, when and to what extent the use of GenAI is permitted.

- Consider the impact of GenAI on your course ILOs, and the strengths and weaknesses of different assessment types.

- Identify which of the three categories listed below current coursework falls into and communicate this to students as soon as possible.

- Plan which assessments may require longer-term changes (e.g. changing assessment type) and speak to the CLT and/or Registry as needed.

- Action any immediate short-term opportunities to adapt assessments (e.g. formative opportunities, introducing scenario-based questions or designing fictional scenarios so that students are unable to enter direct questions into GenAI tools).

Assessments should be categorised in terms of where the use of GenAI:

Type A: Is not permitted.

Type B: Is permitted as an assistive tool for specific defined processes within the assessment and its use is not mandatory in order to complete the assessment.

Type C: Has an integral role: its use is mandatory, and it is a primary tool throughout the assessment process.

(adapted from guidance produced by UCL)

Step 2: Reduce risk

Where course teams identify assessments that do not permit the use of AI, either currently or in the future (Type A), they should consider and plan how to reduce and mitigate any immediate risks associated with the use of AI. This may require short- or longer-term changes to their assessments. For Types B and C, pay particular attention to communicating clear expectations and strengthening messaging around good academic integrity.

- Consider opportunities to strengthen messaging within the course about expectations for academic integrity and academic citizenship.

- Identify how course teams can support students to better develop their ethical and effective use of GenAI in the context of their discipline.

- Plan how you will communicate expectations as a course team to students around the use of GenAI in coursework.

- Action any additional layers of safety (e.g. formative vivas) which can both help to reduce risk and develop students’ AI literacy.

Step 3: Develop opportunities

Course teams should also consider which assessments may, in the longer term, benefit from further development or enhancement so that they remain robust as GenAI capabilities evolve. Again, the three categories above can be used to consider where future assessments may best ‘sit’ to ensure the learning outcomes are met and the ethical use of generative AI is maintained.

- Consider where GenAI can either be incorporated as an assistive tool or integrated into the assessment process more deeply.

- Identify the opportunities for embedding GenAI more deeply into assessment design across the course, and the skills and support required to both teach and assess GenAI effectively.

- Plan how the course team will continue to investigate and share opportunities in GenAI in their disciplinary context.

- Action any changes to assignment briefs/marking criteria as necessary.